A staple offering within Cisco’s device portfolio is its Fabric Extender (FEX) technology. In essence, it allows you to do a few very cool things:

1. Minimize the number of switches you need to manage. FEX devices connect to upstream Nexus switches (5K/6K/7K/9K) and are managed through them. All server connections into a FEX appear as virtual FEX ports on the upstream Nexus switches. For example, if you had four top-of-rack switches uplinked to two Nexus core switches, you could replace those four top-of-rack switches with FEX devices and only have to manage the two Nexus core switches.

2. Significantly increase the number of interfaces your upstream switches can support. Building on the example above, if you had two Nexus 5672 switches (each with 48 10Gbps ports) and connected four Cisco Nexus B22 FEX modules for Dell (each supporting 16 1/10Gbps connections to servers in a Dell blade server chassis), each Nexus switch would now manage 112 ports (48 physical ports + 64 virtual ports, 16 per FEX). This number can be much larger depending on the Nexus model and the type and number of FEX devices.

3. Add flexibility while minimizing cables, power consumption and physical network interface cards (NICs). These benefits are especially noticeable when you combine FEX with a migration from top-of-rack switches and physical servers to blade servers with integrated FEX modules.
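The arithmetic in point 2 is easy to sanity-check in a few lines. The sketch below uses Python and a hypothetical helper, `ports_per_nexus` (not a Cisco tool), and assumes every FEX host port shows up on each parent switch, as it does with dual-homed (vPC) FEXes. It also accounts for something the headline number hides: every fabric uplink from a FEX occupies one of the parent's physical ports.

```python
# Hypothetical helper for the port arithmetic in point 2 (not a Cisco tool).
def ports_per_nexus(physical_ports: int, fex_count: int, host_ports_per_fex: int,
                    fabric_links_per_fex: int = 0) -> dict:
    """Count ports visible on one parent Nexus switch.

    Assumes every FEX host port shows up on this parent, which is the case
    for dual-homed (vPC) FEXes like the ones in the scenario.
    """
    fex_host_ports = fex_count * host_ports_per_fex
    # Each fabric uplink from a FEX to this switch uses up one physical port.
    fabric_ports = fex_count * fabric_links_per_fex
    return {
        "total": physical_ports + fex_host_ports,
        "usable": physical_ports - fabric_ports + fex_host_ports,
    }


# Point 2 example: 48 physical ports plus four B22s with 16 host ports each.
print(ports_per_nexus(48, 4, 16))
# {'total': 112, 'usable': 112}

# Same switch, but with four fabric links from each B22 to this switch.
print(ports_per_nexus(48, 4, 16, fabric_links_per_fex=4))
# {'total': 112, 'usable': 96}
```

With four fabric links per B22 per Nexus switch, as in the scenario below, 16 of the 48 physical ports become fabric ports, so each switch has 96 ports left for servers and other devices.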

At this point, most will agree it’s a cool and useful technology that has been widely implemented in data center architectures. So, why write about it? I’ve done both implementations from scratch and migrations from older Cisco Catalyst technologies, and I’ve found little information about how to do a successful migration. A few folks have also asked me directly how to do it, so… two birds… one stone. I’m going to cover some basic Nexus/FEX configurations that should get you started on your way to a successful and happy migration, as well as point out some pitfalls that might save you some frustration and keep you from being overly FEX’ed!

Scenario Environment

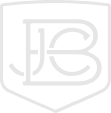

Pre-Migration

- Two Cisco Nexus 5K switches as your core switches (layer 2) with vPC connections (and vPC management link) already established

- Two Dell blade server chassis, each with two Cisco Catalyst 3130X switch blades (layer 2). Each Catalyst switch has one 10 Gbps uplink to each Nexus switch

- Various full- and half-height server blades: ESX hosts running virtual servers, and blades running physical servers

- Upstream routing/firewall devices

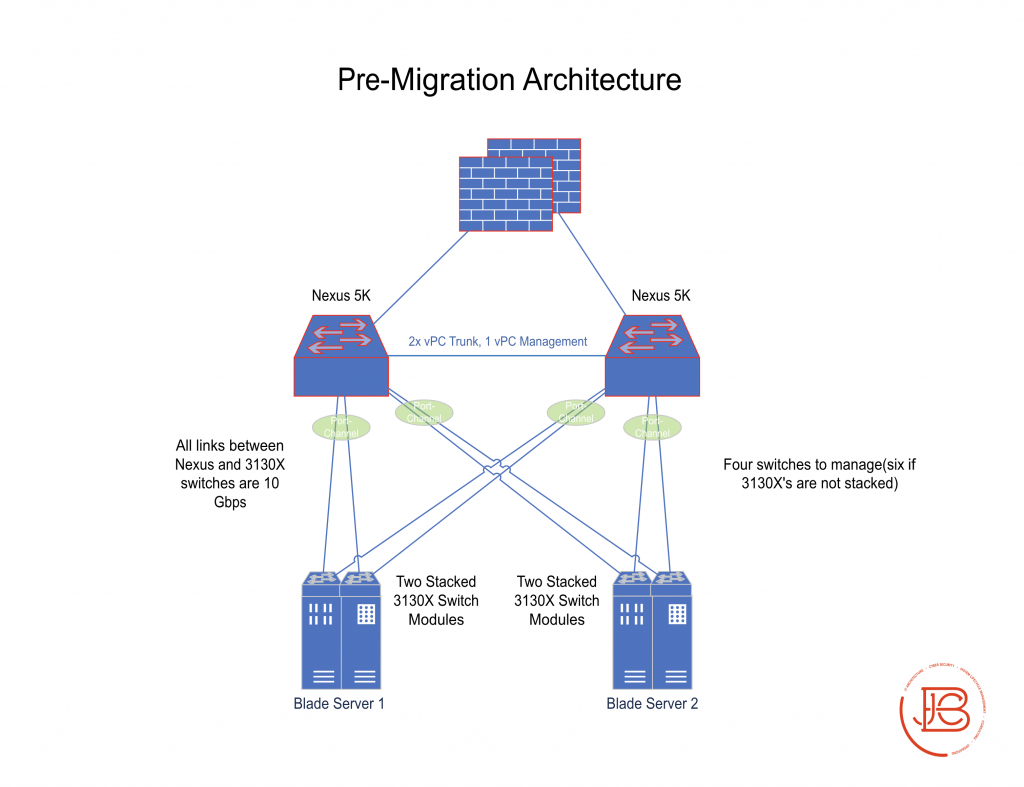

Post-Migration

- Two Cisco Nexus 5K switches as your core switches (layer 2) with vPC connections (and vPC management link) already established

- Two Dell blade server chassis, each with two B22 FEX modules. Each FEX has 8 fabric connections (4 to each Nexus switch)

- Various full- and half-height server blades: ESX hosts running virtual servers, and blades running physical servers

- Upstream routing/firewall devices

The Real Work

There is a decent amount of prep work that can be done on the Nexus switches before migrating from the 3130Xs to the B22s. This consists of:

- Enabling the FEX feature on the Nexus cores

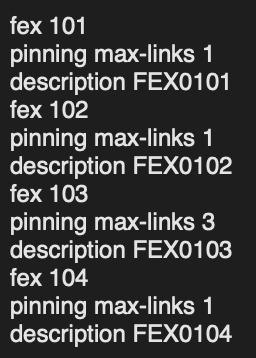

- Creating the FEX groupings (one grouping per FEX module) on the Nexus cores. I also added the “pinning max-links 1” command, which is required when the FEX fabric uplinks are bundled into a port channel.

- Configuration of vPCs between the Nexus cores, if you don’t already have a vPC configuration.

- See here for vPC configuration help: https://www.cisco.com/c/en/us/products/collateral/switches/nexus-5000-series-switches/configuration_guide_c07-543563.html

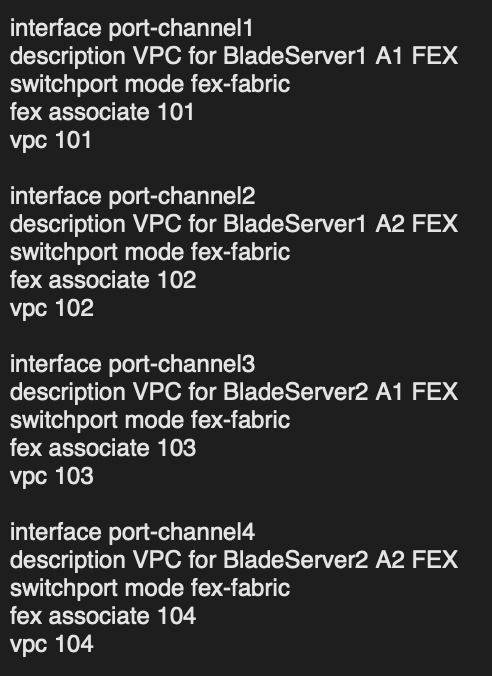

- Creating a port channel for each FEX grouping on the Nexus cores. This involves setting the port channel to fex-fabric mode, associating it with the matching FEX grouping created above, and assigning it a vPC number. I like to use the same number for each FEX grouping, its port channel and its vPC so it is easy to remember (I didn’t do this for the port channels in the screenshot ☹️).

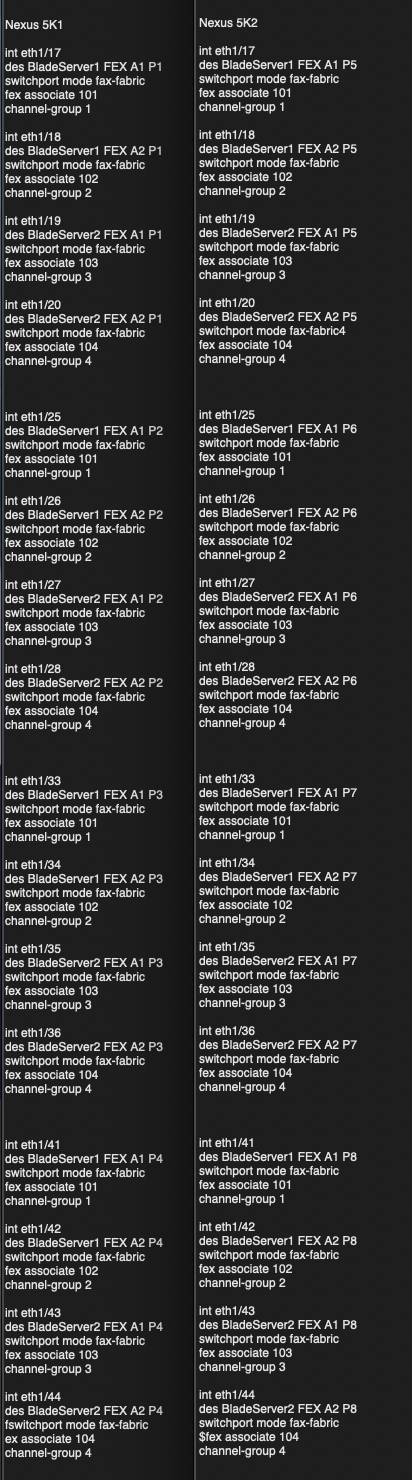

- Configuring physical ports on the Nexus cores as FEX fabric ports and assigning them to the proper port channel. I like to spread each port channel across the various ASICs, in the highly unlikely event of an ASIC failure. The ports are grouped eight per ASIC (ports 1-8 are on one ASIC, 9-16 on another, and so on), so I make sure each port channel’s member links land on separate ASICs. I will say I haven’t run into an ASIC failure yet, so at this point it’s more of a superstition.
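If you want to double-check the ASIC spread before cabling, here is a minimal Python sketch. It assumes the simple rule described above (eight consecutive front-panel ports per ASIC); the real port-to-ASIC layout depends on the Nexus model, so confirm it for your switch. The function names, and port channel 105 in the counter-example, are hypothetical.

```python
# Sketch: checks that each port channel's members sit on different ASICs.
# ASSUMPTION: the rule of 8 front-panel ports per ASIC (1-8, 9-16, ...).
# Real port-to-ASIC layouts vary by model; check yours before trusting this.
from typing import Dict, List

PORTS_PER_ASIC = 8


def asic_group(port: int) -> int:
    """Zero-based ASIC group for a 1-based front-panel port number."""
    return (port - 1) // PORTS_PER_ASIC


def spread_across_asics(members: Dict[int, List[int]]) -> Dict[int, bool]:
    """Map each port channel to True if no two member ports share an ASIC."""
    result = {}
    for port_channel, ports in members.items():
        groups = [asic_group(p) for p in ports]
        result[port_channel] = len(groups) == len(set(groups))
    return result


# Fabric port channels on Nexus 5K1 (member ports taken from the config listing).
nexus_5k1 = {
    101: [17, 25, 33, 41],
    102: [18, 26, 34, 42],
    103: [19, 27, 35, 43],
    104: [20, 28, 36, 44],
}
print(spread_across_asics(nexus_5k1))
# {101: True, 102: True, 103: True, 104: True}

# Hypothetical counter-example: ports 1-2 share one ASIC, 9-10 share another.
print(spread_across_asics({105: [1, 2, 9, 10]}))
# {105: False}
```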

No configuration is needed on the FEX side: once you power the FEXes on and cable them to the upstream Nexus switches, they require no additional setup. They will take a little time to download their software from the Nexus switches; you can check their status with the “show fex” command. And because they get their software from the Nexus switch, there is no need to update them individually. Any patches/updates are bundled with the NX-OS software that you install on your Nexus switch, another time/resource savings!

Below are all the configurations used in the above screenshots (with corrected port channel numbers):

Nexus 5K1

feature fex

! Only needed if you use PVLANs on FEX trunk ports

system private-vlan fex trunk

fex 101

pinning max-links 1

description FEX0101

fex 102

pinning max-links 1

description FEX0102

fex 103

pinning max-links 1

description FEX0103

fex 104

pinning max-links 1

description FEX0104

interface port-channel101

description VPC for BladeServer1 A1 FEX

switchport mode fex-fabric

fex associate 101

vpc 101

interface port-channel102

description VPC for BladeServer1 A2 FEX

switchport mode fex-fabric

fex associate 102

vpc 102

interface port-channel103

description VPC for BladeServer2 A1 FEX

switchport mode fex-fabric

fex associate 103

vpc 103

interface port-channel104

description VPC for BladeServer2 A2 FEX

switchport mode fex-fabric

fex associate 104

vpc 104

int eth1/17

des BladeServer1 FEX A1 P1

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/18

des BladeServer1 FEX A2 P1

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/19

des BladeServer2 FEX A1 P1

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/20

des BladeServer2 FEX A2 P1

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/25

des BladeServer1 FEX A1 P2

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/26

des BladeServer1 FEX A2 P2

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/27

des BladeServer2 FEX A1 P2

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/28

des BladeServer2 FEX A2 P2

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/33

des BladeServer1 FEX A1 P3

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/34

des BladeServer1 FEX A2 P3

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/35

des BladeServer2 FEX A1 P3

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/36

des BladeServer2 FEX A2 P3

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/41

des BladeServer1 FEX A1 P4

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/42

des BladeServer1 FEX A2 P4

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/43

des BladeServer2 FEX A1 P4

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/44

des BladeServer2 FEX A2 P4

switchport mode fex-fabric

fex associate 104

channel-group 104

Nexus 5K2

feature fex

! Only needed if you use PVLANs on FEX trunk ports

system private-vlan fex trunk

fex 101

pinning max-links 1

description FEX0101

fex 102

pinning max-links 1

description FEX0102

fex 103

pinning max-links 1

description FEX0103

fex 104

pinning max-links 1

description FEX0104

interface port-channel101

description VPC for BladeServer1 A1 FEX

switchport mode fex-fabric

fex associate 101

vpc 101

interface port-channel102

description VPC for BladeServer1 A2 FEX

switchport mode fex-fabric

fex associate 102

vpc 102

interface port-channel103

description VPC for BladeServer2 A1 FEX

switchport mode fex-fabric

fex associate 103

vpc 103

interface port-channel104

description VPC for BladeServer2 A2 FEX

switchport mode fex-fabric

fex associate 104

vpc 104

int eth1/17

des BladeServer1 FEX A1 P5

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/18

des BladeServer1 FEX A2 P5

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/19

des BladeServer2 FEX A1 P5

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/20

des BladeServer2 FEX A2 P5

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/25

des BladeServer1 FEX A1 P6

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/26

des BladeServer1 FEX A2 P6

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/27

des BladeServer2 FEX A1 P6

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/28

des BladeServer2 FEX A2 P6

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/33

des BladeServer1 FEX A1 P7

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/34

des BladeServer1 FEX A2 P7

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/35

des BladeServer2 FEX A1 P7

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/36

des BladeServer2 FEX A2 P7

switchport mode fex-fabric

fex associate 104

channel-group 104

int eth1/41

des BladeServer1 FEX A1 P8

switchport mode fex-fabric

fex associate 101

channel-group 101

int eth1/42

des BladeServer1 FEX A2 P8

switchport mode fex-fabric

fex associate 102

channel-group 102

int eth1/43

des BladeServer2 FEX A1 P8

switchport mode fex-fabric

fex associate 103

channel-group 103

int eth1/44

des BladeServer2 FEX A2 P8

switchport mode fex-fabric

fex associate 104

channel-group 104
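The two listings above are identical apart from the fabric-port descriptions (P1-P4 on 5K1, P5-P8 on 5K2), so rather than hand-editing dozens of near-duplicate lines you could generate them from one table. This is a minimal Python sketch under the scenario's assumptions (FEX 101-104, the same Nexus port numbers on both switches, one shared number per FEX, port channel and vPC). `nexus_fex_config` is a hypothetical helper; it uses “pinning max-links 1” for every FEX, since that is required when the fabric uplinks are bundled into a port channel, and it leaves out the optional “system private-vlan fex trunk” line.

```python
# Hypothetical helper: builds the FEX portion of one parent switch's config.
# Assumes the scenario's cabling: FEX 101-104, four fabric ports per FEX per switch.
from typing import List

# FEX number -> "<chassis> <slot>" label used in the descriptions.
FEX_LABELS = {
    101: "BladeServer1 A1",
    102: "BladeServer1 A2",
    103: "BladeServer2 A1",
    104: "BladeServer2 A2",
}
# Each row lists the Nexus ports for FEX 101..104; successive rows map to FEX
# uplinks P1..P4 on 5K1 (or P5..P8 on 5K2).
PORT_ROWS = [(17, 18, 19, 20), (25, 26, 27, 28), (33, 34, 35, 36), (41, 42, 43, 44)]


def nexus_fex_config(first_uplink: int) -> List[str]:
    """Return config lines; first_uplink is 1 for Nexus 5K1 and 5 for Nexus 5K2."""
    lines = ["feature fex"]
    for fex in FEX_LABELS:
        # max-links must be 1 when the fabric uplinks form a port channel.
        lines += [f"fex {fex}", "  pinning max-links 1", f"  description FEX0{fex}"]
    for fex, label in FEX_LABELS.items():
        # Same number for FEX, port channel and vPC keeps things easy to remember.
        lines += [
            f"interface port-channel{fex}",
            f"  description VPC for {label} FEX",
            "  switchport mode fex-fabric",
            f"  fex associate {fex}",
            f"  vpc {fex}",
        ]
    for row_index, row in enumerate(PORT_ROWS):
        for fex, port in zip(FEX_LABELS, row):
            chassis, slot = FEX_LABELS[fex].split()
            lines += [
                f"interface Ethernet1/{port}",
                f"  description {chassis} FEX {slot} P{first_uplink + row_index}",
                "  switchport mode fex-fabric",
                f"  fex associate {fex}",
                f"  channel-group {fex}",
            ]
    return lines


if __name__ == "__main__":
    print("\n".join(nexus_fex_config(first_uplink=1)))  # Nexus 5K1; use 5 for 5K2
```

Generating both configs from the same table keeps the FEX, port-channel and vPC numbers identical on the two vPC peers, which is exactly where copy-and-paste mistakes tend to creep in.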

The Wrap Up

At this point you are ready to rock and roll, turn up your FEXes, and start swinging cables over, right? Not quite yet!!! There are a few gotchas that hopefully we can help you avoid before you get FEX’ed.

- First, copy the whole config off any switches you are migrating to FEX devices (and off the upstream Nexus switches you are connecting them to). Keep it handy on whatever device you will be configuring the switches from.

- Second, make sure you know the credentials for, or create, local accounts on the network devices involved in this migration (Nexus and Catalyst switches). In this specific scenario, the AAA servers are downstream of the FEX devices. So once the Catalyst switches go down and the FEXes are stood up, any configuration problem may leave the AAA servers unreachable. You will be glad you have local credentials.

- Third, any ACLs you have will be in a slightly different format when migrating from Catalyst to Nexus. The main differences are that the ACL name no longer takes the “extended” keyword, and “host” statements and wildcard masks are replaced by CIDR notation. Luckily the Nexuses….Nexus’s…..Nexi (we’ll go with Nexi) make that translation for you, but you will still have to remove the “extended” keyword from your ACL definitions.

- If you have ACLs that need to be moved over, there is a chance you also have VLAN access maps that reference those ACLs, also known as VLAN ACLs (VACLs). There are some differences between the platforms. On Catalyst, VLAN access maps are normally configured with two statements: one that matches the traffic you want to permit, and one that denies everything else. On Nexus, there is only the permit statement; anything that doesn’t match it is dropped. One nice perk: if you are using VACLs, the Nexus will let you add log statements to them. Make sure to add them where applicable.

- When moving the VACLs and VLAN access maps over, make sure to also add the “vlan filter” statements on the Nexus cores that apply each access map to the appropriate VLANs. Otherwise, all your hard work moving over the VACLs/access maps goes to waste.

- If you are using private VLANs (PVLANs), you might be in for a treat. Not all PVLAN types are supported on device connections to the FEX modules/devices!!! I’ve run into situations where traffic did not reach hosts as expected when both promiscuous and isolated hosts were in the same VLAN on a vSwitch in an ESX environment, with the ESX server connected over a trunk port. There is documentation out there, but you have to dig to find it. My recommendation: if you are using PVLANs with FEX modules, do some testing, and split any PVLANs that mix port types so that promiscuous ports go in one PVLAN and isolated hosts go in a new, separate PVLAN. Tread carefully!

- Lastly, make sure you have enabled the global “system private-vlan fex trunk” command if any FEX host interfaces are trunks carrying PVLANs. If you don’t, the interfaces will show as connected but will not pass traffic as expected.
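For the ACL translation described in the third bullet above: NX-OS converts wildcard masks for you, but if you would rather review the translated ACLs offline before the cutover, a short script can do the same rewrite. This is a minimal Python sketch with hypothetical helper names; it only handles the differences mentioned above (dropping “extended”, and turning “host” entries and address/wildcard pairs into CIDR) and passes everything else, such as “any” and port operators, through untouched. Compare its output with what the Nexus actually shows in its running config.

```python
# Sketch of the Catalyst-to-NX-OS ACL rewrite. Hypothetical helper names;
# handles only "extended", "host", and address/wildcard pairs.
import ipaddress


def is_ipv4(token: str) -> bool:
    """True if the token is a dotted-quad IPv4 address."""
    try:
        ipaddress.IPv4Address(token)
        return True
    except ValueError:
        return False


def wildcard_to_cidr(addr: str, wildcard: str) -> str:
    """Convert an IOS address/wildcard pair (e.g. 10.1.1.0 0.0.0.255) to CIDR."""
    # These two masks are also valid netmasks, so ipaddress would misread them.
    if wildcard == "0.0.0.0":
        return f"{addr}/32"  # IOS: match this exact host
    if wildcard == "255.255.255.255":
        return "any"  # IOS: match every address
    # ipaddress treats a dotted mask that isn't a valid netmask as a hostmask
    # (wildcard). Non-contiguous wildcards raise ValueError: no CIDR equivalent.
    return str(ipaddress.IPv4Network(f"{addr}/{wildcard}", strict=False))


def convert_ace(line: str) -> str:
    """Rewrite one IOS ACL line into NX-OS-style syntax."""
    tokens = line.split()
    if tokens[:3] == ["ip", "access-list", "extended"]:
        # NX-OS ACL names take no "extended" keyword.
        return " ".join(["ip", "access-list"] + tokens[3:])
    out = []
    i = 0
    while i < len(tokens):
        if tokens[i] == "host" and i + 1 < len(tokens):
            out.append(f"{tokens[i + 1]}/32")
            i += 2
        elif is_ipv4(tokens[i]) and i + 1 < len(tokens) and is_ipv4(tokens[i + 1]):
            out.append(wildcard_to_cidr(tokens[i], tokens[i + 1]))
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return " ".join(out)


for ios_line in [
    "ip access-list extended WEB-IN",
    "permit tcp host 10.1.1.10 10.2.0.0 0.0.255.255 eq 443",
    "deny ip any any",
]:
    print(convert_ace(ios_line))
# ip access-list WEB-IN
# permit tcp 10.1.1.10/32 10.2.0.0/16 eq 443
# deny ip any any
```

Non-contiguous wildcard masks (for example 0.0.255.0) have no CIDR equivalent, so `wildcard_to_cidr` raises a ValueError for them; those entries need to be rewritten by hand.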

So, there you go, a whole lot of FEX fun! One last key point before attempting your migration (and it is really good practice with any configuration change): don’t remove any of the old configuration on the Nexus switches (old uplinks, port groups, etc.) too quickly. You might need to roll back to the old switches at some point in the migration, especially if you run into one of the gotchas mentioned above and need to restore normal operations while you isolate the issue.

References

Some great resources on FEXes, Nexus switches, and ASICs

- https://www.cisco.com/c/en/us/solutions/data-center-virtualization/fabric-extender-technology-fex-technology/index.html

- https://www.networkstraining.com/vlan-access-map-example-configuration/

- https://www.cisco.com/c/en/us/products/collateral/switches/nexus-5000-series-switches/configuration_guide_c07-543563.html

- https://www.dell.com/en-us/work/shop/povw/cisco-nexus-fex

- https://packetpushers.net/cisco-nexus-5500-front-panel-port-to-internal-asic-layout-from-brkarc-3452/

- https://www.manualslib.com/manual/1254292/Cisco-Nexus-5000-Series.html?page=84

- https://www.netsolutionworks.com/datasheets/Cisco-Nexus-B22-Blade-Fabric-Extender-for-Dell-Product-Overview-and-Spec-Sheet.pdf

Find out more about J.B.C.’s Cyber&Sight™ blog here.